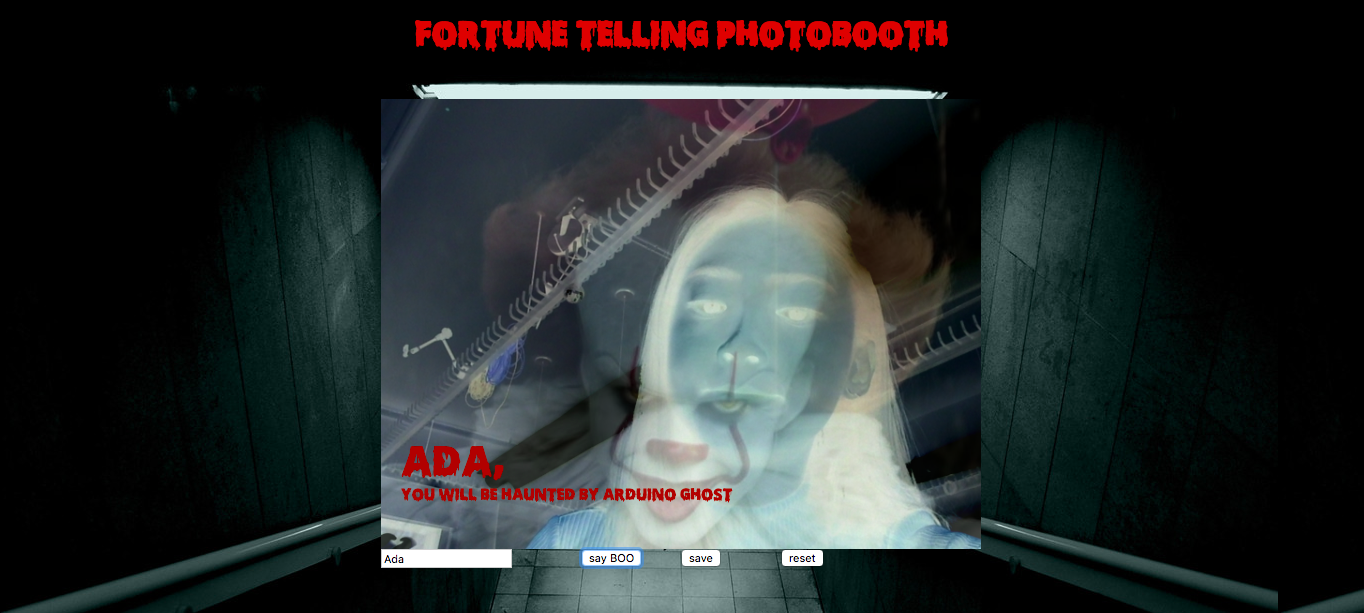

I combined this week's assignment with my Halloween-themed Pcomp midterm. The idea is a spooky photo booth that tells your fortune. Inspired by the transparency example, I thought about displaying a half-opacity ghost/demon image as a filter on top of the webcam image. The DOM elements I added from the p5 sketch are: a video capture (webcam = createCapture(VIDEO)), a text input (nameInput = createInput('type your name')), a button that calls takePic to freeze the video and display messages, a button that saves the picture, and a button that resets the webcam back to active. The text input and button elements on the webpage control the photo booth actions on the canvas through different callbacks. I also added a "Fortune telling photo booth" header inside index.html, used @font-face to set the header font, and added a background image in the CSS. My sketch: https://editor.p5js.org/ada10086/sketches/rksofdUim

To play photobooth: https://editor.p5js.org/ada10086/full/rksofdUim
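The freeze/reset behaviour described above can be sketched in plain JavaScript. This is a minimal illustration and not the code from my sketch: apart from takePic, every name here (createBooth, frozen, message, reset) and the placeholder message text are assumptions made up for the example. In p5, the two callbacks would be attached to the buttons with mousePressed(), and draw() would only refresh the webcam frame while frozen is false.

```javascript
// Hypothetical booth state; names other than takePic are made up for illustration.
function createBooth() {
  const booth = { frozen: false, message: '' };

  // Callback for the "take picture" button: freeze the frame and show a message.
  booth.takePic = function (name) {
    booth.frozen = true;
    booth.message = 'Fortune for ' + name; // placeholder text, not the real fortune
  };

  // Callback for the "reset" button: go back to the live webcam.
  booth.reset = function () {
    booth.frozen = false;
    booth.message = '';
  };

  return booth;
}

const booth = createBooth();
booth.takePic('Sam');
console.log(booth.frozen, booth.message);
booth.reset();
console.log(booth.frozen);
```

Keeping the state in one object means the canvas code only has to check a single flag to decide whether to show the live video or the frozen picture.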

Another project I made using DOM and HTML is an animal sampler for my Code of Music class. I created a dropdown menu for selecting animal sounds using sel = createSelect(). However, when I call sel.value() to get the animal name, which is also the name of the variable where I stored my preloaded animal sound sample, I can only get a string: 'dog', 'cat', 'cow', etc. So I was wondering if there is a way to convert strings to variable names (to get rid of the ' '). I read that some people suggest using window[] or eval(), but neither of them solved my problem. I had to find a way to use the string value to load the sound and the image according to the string being selected. Therefore I have:

selectedAnimal = sel.value();

animal = loadImage('animalImage/' + selectedAnimal + '.png');

"F1": 'animalSound/' + selectedAnimal + '.wav'.

to include the sel.value() in the file name when I load the image and sound files, instead of calling a preset variable dog, cat, or cow. However, this way I had to convert my sound and image files so that they all have the same extensions, png and wav.
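Here is a small sketch of the same idea, plus one common alternative to turning strings into variable names: storing things in an object and using the string as the key. The names assetPaths, samples, and lookup are hypothetical and not from my sketch, and the object holds file paths here only so the example runs without p5 or any sound library. One likely reason window[name] fails is that variables declared with let or const at the top level are not properties of window, although I have not confirmed that this was the cause in my case.

```javascript
// Hypothetical helper: build the file paths shown above from the dropdown string.
function assetPaths(selectedAnimal) {
  return {
    image: 'animalImage/' + selectedAnimal + '.png',
    sound: 'animalSound/' + selectedAnimal + '.wav',
  };
}

// Alternative: keep one entry per animal in an object keyed by name,
// so the string from sel.value() is used as a key instead of a variable name.
const samples = {
  dog: assetPaths('dog'),
  cat: assetPaths('cat'),
  cow: assetPaths('cow'),
};

// Look up an entry by the selected string; returns undefined for unknown names.
function lookup(name) {
  return samples[name];
}

console.log(lookup('cat').sound);
```

In a real sketch the values in samples could be the preloaded images and sounds themselves rather than paths, which would avoid reloading files every time the selection changes.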

Another problem I had when I added the dropdown menu is that I cannot change the size of the text in the menu. The size() function only changes the size of the menu box, but the text stays the same. I tried to change it in the CSS and HTML files with font-size, but nothing changed.

To get around selecting animals with the dropdown menu, I thought about displaying all the animal images on the webpage underneath the canvas as image elements, e.g. dog = createImg(), and using dog.mousePressed() to select the sound sample (code).

However, I couldn't get the absolute positioning of the images correct. I was wondering how to set the absolute positioning using position() as well as in the html/css file.

One problem I had for both sketches is that when I tried to set the canvas to the center of the window, instead of using (windowWidth/2, windowHeight/2), I had to use ((windowWidth - width) / 2, (windowHeight - height) / 2), which confuses me a lot.
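A small sketch of the arithmetic may help here. In p5, position(x, y) places the top-left corner of an element, so passing (windowWidth/2, windowHeight/2) puts the canvas's corner at the center of the window rather than its middle. Subtracting the canvas size first and then halving leaves equal margins on both sides. The function name centeredTopLeft is hypothetical, and the sizes below are made-up example numbers.

```javascript
// Hypothetical helper: top-left corner that centers a box inside a container.
function centeredTopLeft(containerW, containerH, boxW, boxH) {
  return {
    x: (containerW - boxW) / 2, // leftover horizontal space, split evenly
    y: (containerH - boxH) / 2, // leftover vertical space, split evenly
  };
}

// Example: a 400x300 canvas in a 1000x800 window.
const corner = centeredTopLeft(1000, 800, 400, 300);
console.log(corner.x, corner.y); // prints 300 250
```

In a sketch the result would be passed to the canvas's position(), with windowWidth, windowHeight, width, and height as the four arguments.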

To play: https://editor.p5js.org/ada10086/full/SJ8-14tj7

My code: https://editor.p5js.org/ada10086/sketches/SJ8-14tj7