Made a virtual gallery space with ProBuilder; added sound mass models/animations to the gallery in Unity

Checked in with Noah and Tak on Sound Mass composition

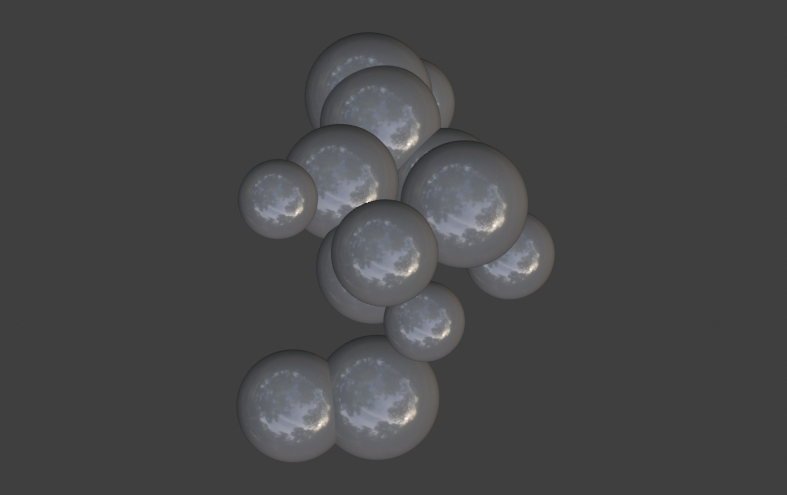

3d printed bubbles model

Help session with Carol on Unity lighting settings (fog) and post-processing effects (post process layer/post process volume on centerEyeAnchor)

4/15 Wednesday

reorganized sound mass models and imported .blend and .fbx with animations from Blender to Unity

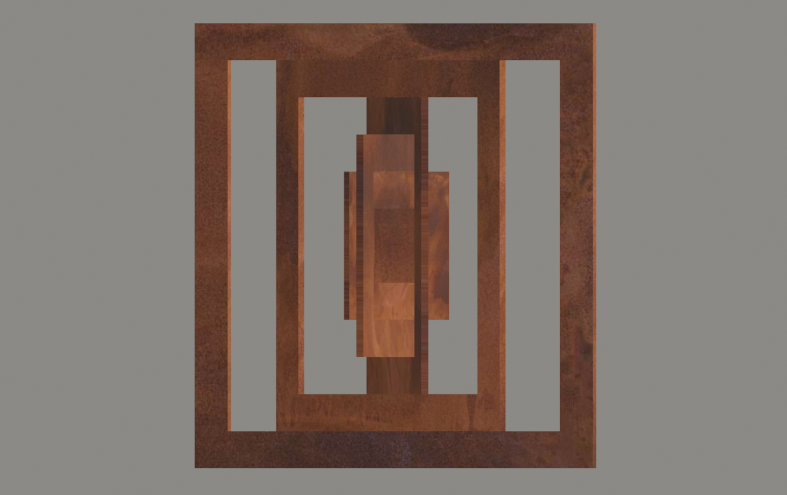

3d printed concentric squares sound mass model

built test app for Oculus Quest

4/14 Tuesday - Day 29

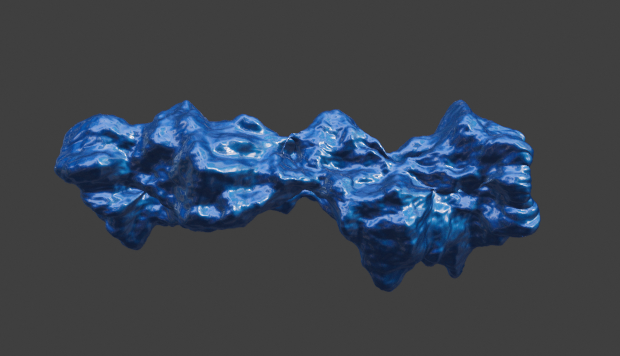

Reprinted form I extra fine

Fixed Form I UV map in Blender, fixed Unity model target + animation size issue, synced animation with audio in Unity

3d printed spiral for sound mass

4/13 Monday - Day 28

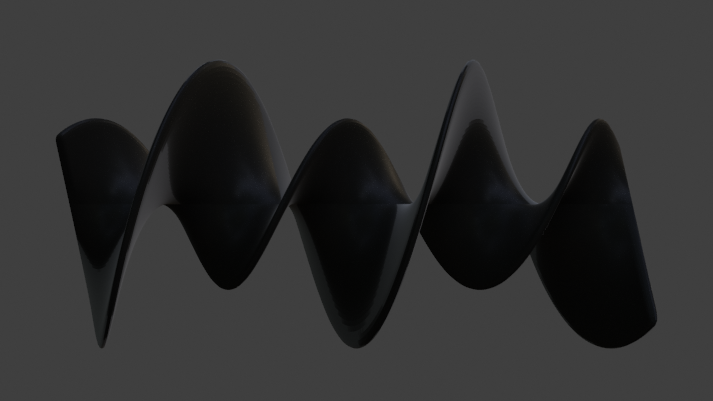

More sound mass models and animations

Office hour with Ben on 3d print finishings

In and out of reality class on event system, animator, collider, particle system

3d printed 2 models

Updated Noah and Tak on sound mass technique

4/11 Saturday - Day 27

Created more models for Sound Mass form

4/10 Friday - Day 26

Recreated Form II image target to improve trackability

Created more models in Blender

4/9 Thursday - Day 25

Office hour with David

Form I: match the dimensions of the model target and the animation model

image scan raycast highlight

Office hour with Sarah

get really good documentation

further user testing, with documentation focused not on the concept but on the work of art

focus on deepening/polishing existing forms

4/8 Wednesday - Day 24

Office hour with Robby

Fixed fractal shader gradient problem

Migrated Unity assets from V2018 to V2019, built and ran on device

4/7 Tuesday - Day 23

Thesis

Exported Noteflight score MIDI to GarageBand, generated Form I audio using Clarinet

In Unity, animated the texture offset of the shader on the model target's overlay model, driven by the audio source's current playback position
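
A minimal sketch of the mapping behind that animation, written in Python rather than Unity C# so it runs standalone. It assumes the offset is simply the playback position divided by the clip length, so the texture scrolls once per playthrough. The function name and the wrap-around behavior are assumptions for illustration, not the project's actual script. In Unity the two inputs would likely come from `AudioSource.time` and `AudioClip.length`, with the result written to the material's texture offset.

```python
def playback_to_uv_offset(playback_seconds: float, clip_seconds: float) -> float:
    """Map an audio playback position to a horizontal texture offset in [0, 1).

    Assumption: one full pass of the clip scrolls the texture exactly once.
    """
    if clip_seconds <= 0:
        raise ValueError("clip_seconds must be positive")
    # Normalize the position, then wrap so a looping clip restarts the scroll at 0.
    return (playback_seconds / clip_seconds) % 1.0


if __name__ == "__main__":
    # Hypothetical 40-second clip sampled at a few playback positions.
    for t in (0.0, 10.0, 20.0, 40.0):
        print(t, playback_to_uv_offset(t, 40.0))
```

Driving the offset from the playback position, rather than from elapsed frame time, keeps the playhead aligned with the audio even if the clip is paused or restarted.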

Quick and Dirty show

Coherence of visual style of objects in an exhibition setting

Continuity among the interactions

Why do I care? What's the headline? Where am I in this concept? State this at the beginning

What's the one big underlying concept that connects all the forms? Articulate it at the beginning and reiterate it at the end; for each form, state which facet of the concept I'm relating to

Form III: what's the player's goal?

Form II: the texture of the music is the metaphor; it's an interesting composing technique that a lot of people have rarely heard. Make a 3D object animation as a prototype, without a physical model

Form I vs. IV: good contrast between classical and synthesized sound

Form III website more usable

Thesis small group meeting with Andrew, Jackie, Adi

minimal explanation of references; more focus on my own process

juxtapose all the forms with minimal descriptions for each one

Jackie’s portrait of me as a potato on zoom

4/6 Monday - Day 22

Thesis

Office hour with David on Form I animation

Watched UV unwrapping tutorial

In Blender, created a UV map for the Form I model by unwrapping the horizontal surface of the Möbius strip so that one full loop flattens out across the map. Used a gradient playhead (PS) as the image texture for the UV map. In Unity, applied the UV map to the texture of the Unlit/Transparent shader

4/4 Saturday - Day 20

Thesis

Tested Form I object tracking reliability

Decided on Form II image

prepared design files for laser cutting

replaced image target/audio in Unity

4/3 Friday - Day 19

Thesis:

Office hour with Yotam for conceptual feedback

Updated Form III score layout

Reward desirable behaviors instead of imposing restrictions

Player is the conductor, system is the orchestra. Player should have more authority and freedom over how the piece progresses.

Natural mapping between images and sound

Progression in the sections, intro - dev - ending

Concept transcends platforms - pivot to proof of concept with Max/MSP or web interaction

4/2 Thursday - Day 18

Thesis:

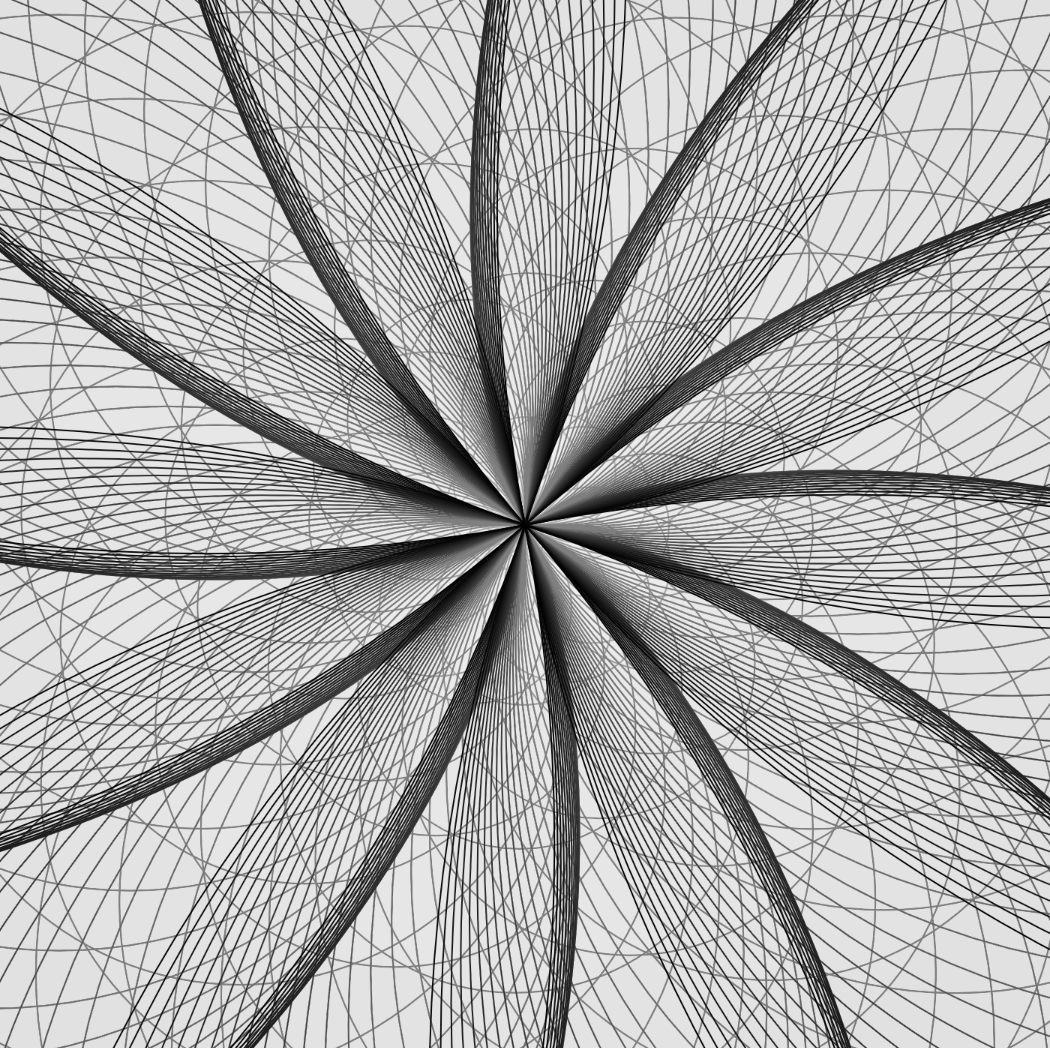

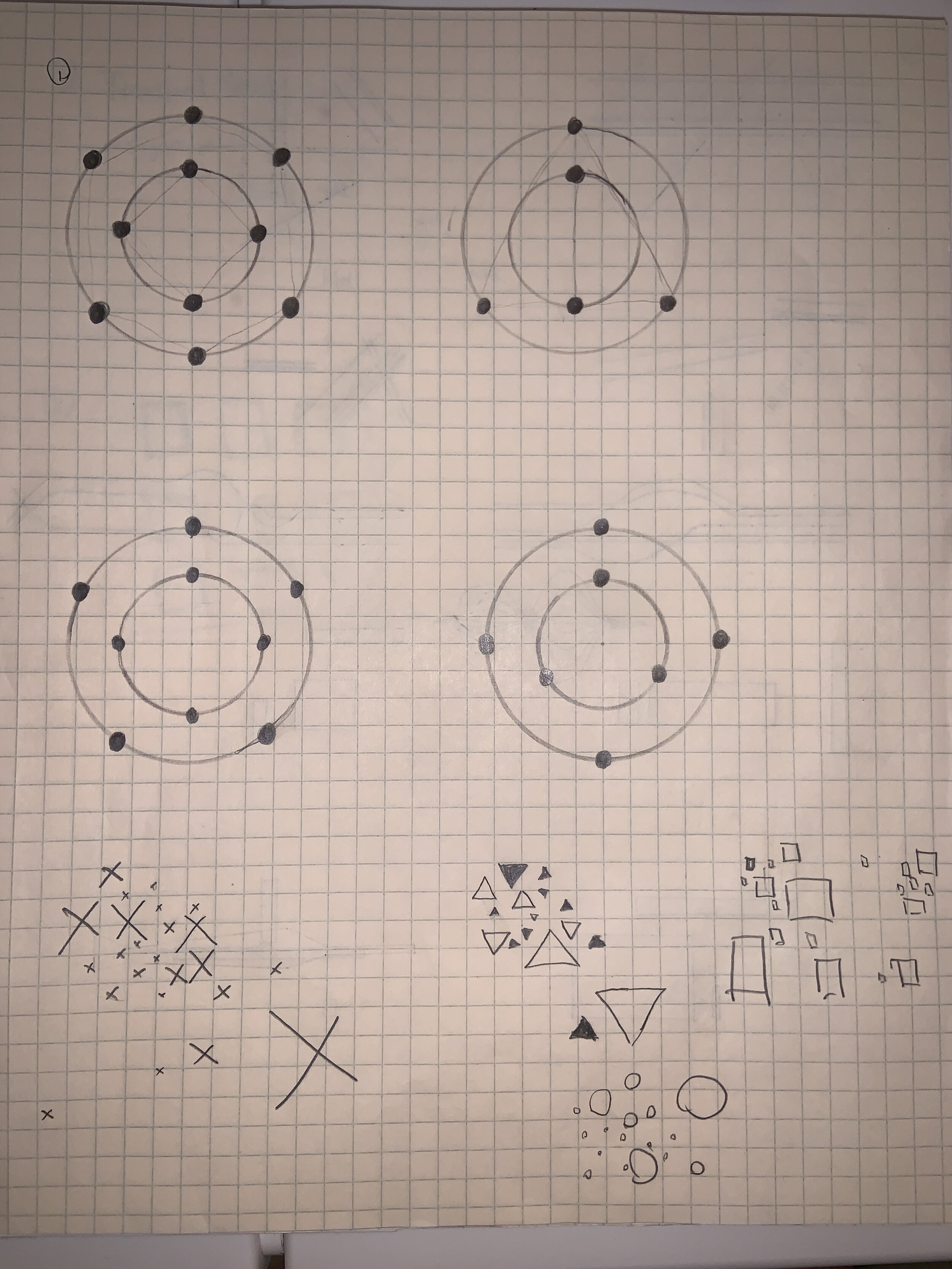

Office hour with Gabe on image detection stability of minimal patterns

solution 1 - pivot to using Max/MSP to get a working demo of concept

solution 2 - adding complex border/background to each pattern

Discussion with Tiri

waiting time between activations - listen and observe the change and progression of the composition

laying out the grids as sections allows more interesting possibilities within the same section: it can hold multiple variations of the same pattern/instrument, adding more layers of similar sounds

leave certain decisions up to the players, but use rules/instructions to constrain them

“The balance between the constrained and free, the ordered and open”

Form II: use the color spectrum to match audio frequencies
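
One possible way to sketch that color-to-frequency idea in Python. Everything here is an assumption for illustration: the 20 Hz to 20 kHz range, the logarithmic scale (chosen because pitch is perceived roughly logarithmically), and the choice to run from red for low frequencies to violet for high ones, echoing the ordering of visible light. The function name `frequency_to_rgb` is hypothetical.

```python
import colorsys
import math


def frequency_to_rgb(freq_hz, low_hz=20.0, high_hz=20000.0):
    """Map an audio frequency to an RGB color (each channel in 0..1).

    Assumption: frequencies are placed on a log scale and spread across
    hues from red (low) to violet (high).
    """
    # Clamp so out-of-range inputs still produce a valid color.
    clamped = min(max(freq_hz, low_hz), high_hz)
    # Position in 0..1 along the logarithmic frequency axis.
    position = math.log(clamped / low_hz) / math.log(high_hz / low_hz)
    # Stop at 270 degrees of hue so the top of the range does not wrap back to red.
    hue = position * (270.0 / 360.0)
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)


if __name__ == "__main__":
    for f in (20.0, 440.0, 20000.0):
        print(f, frequency_to_rgb(f))
```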

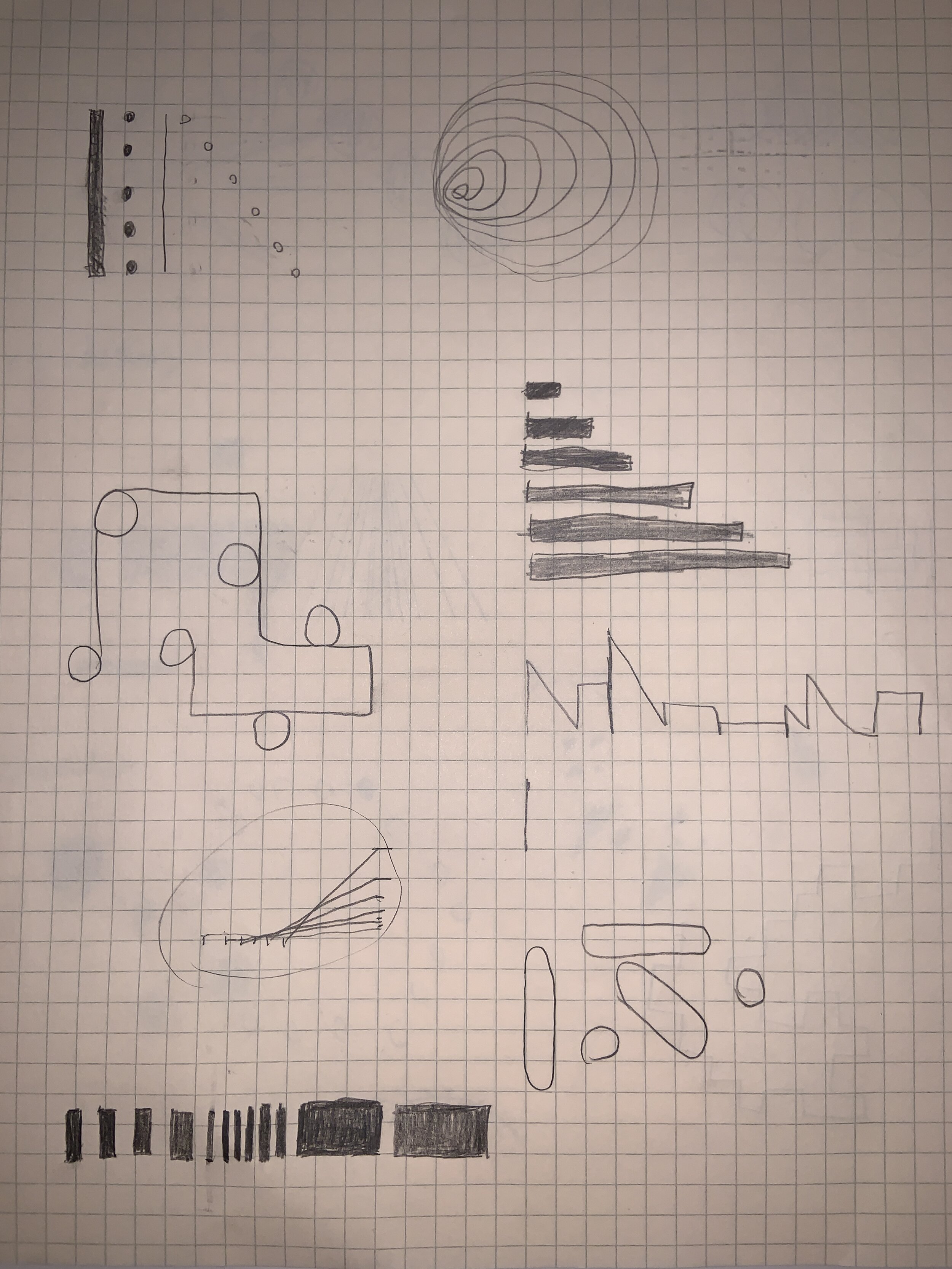

updated form III scores

Learning progress

2 hour Unity shadergraph workshop

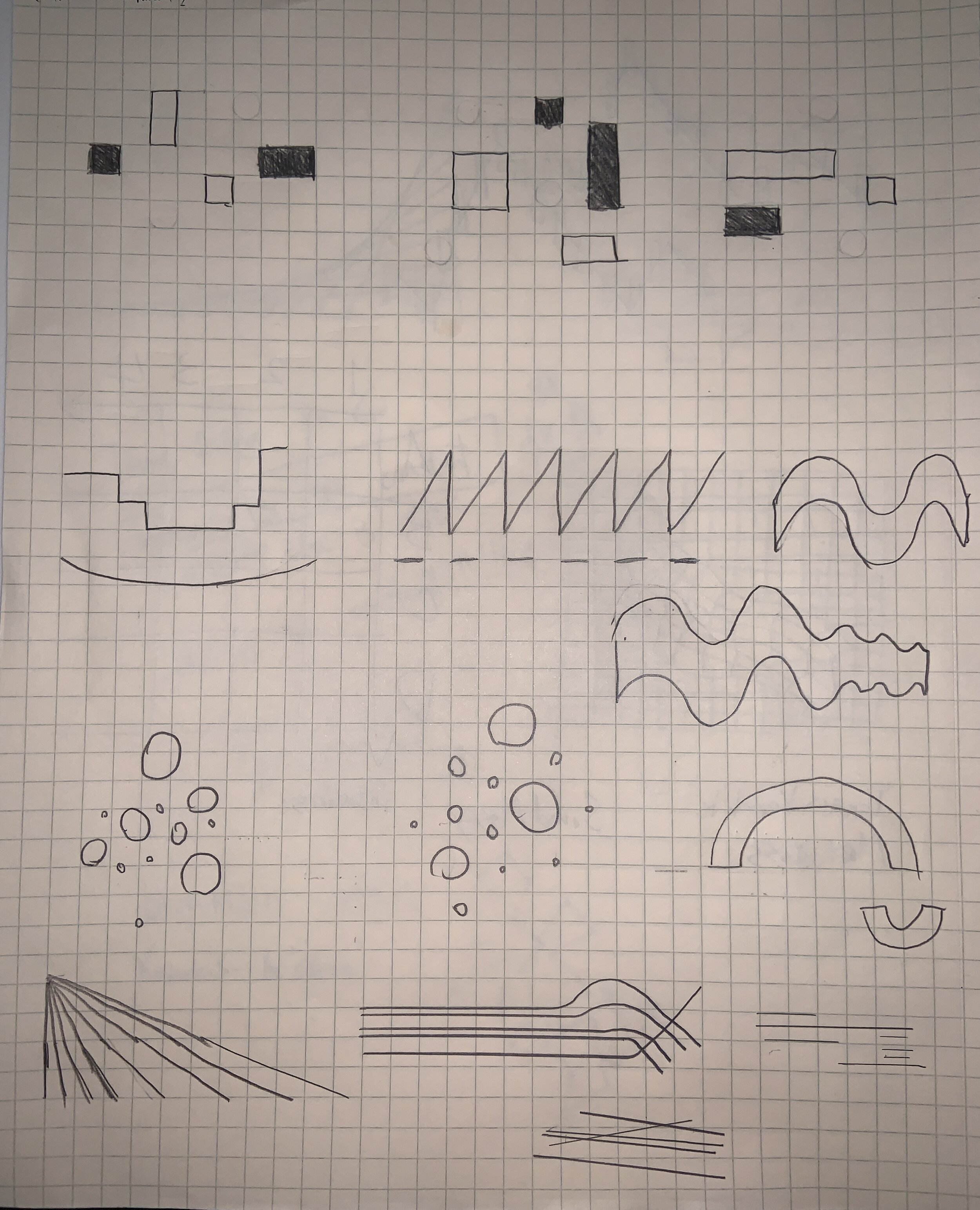

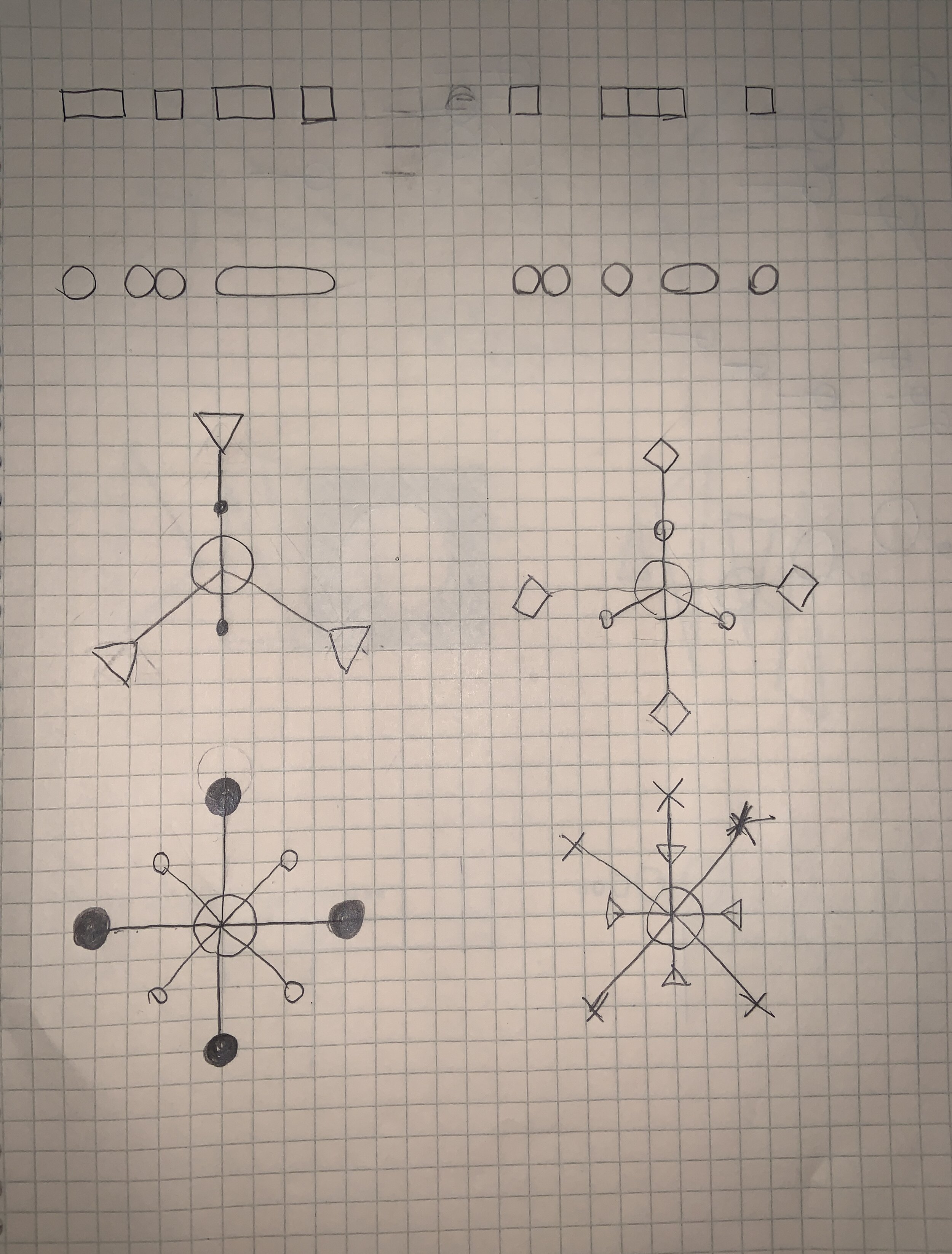

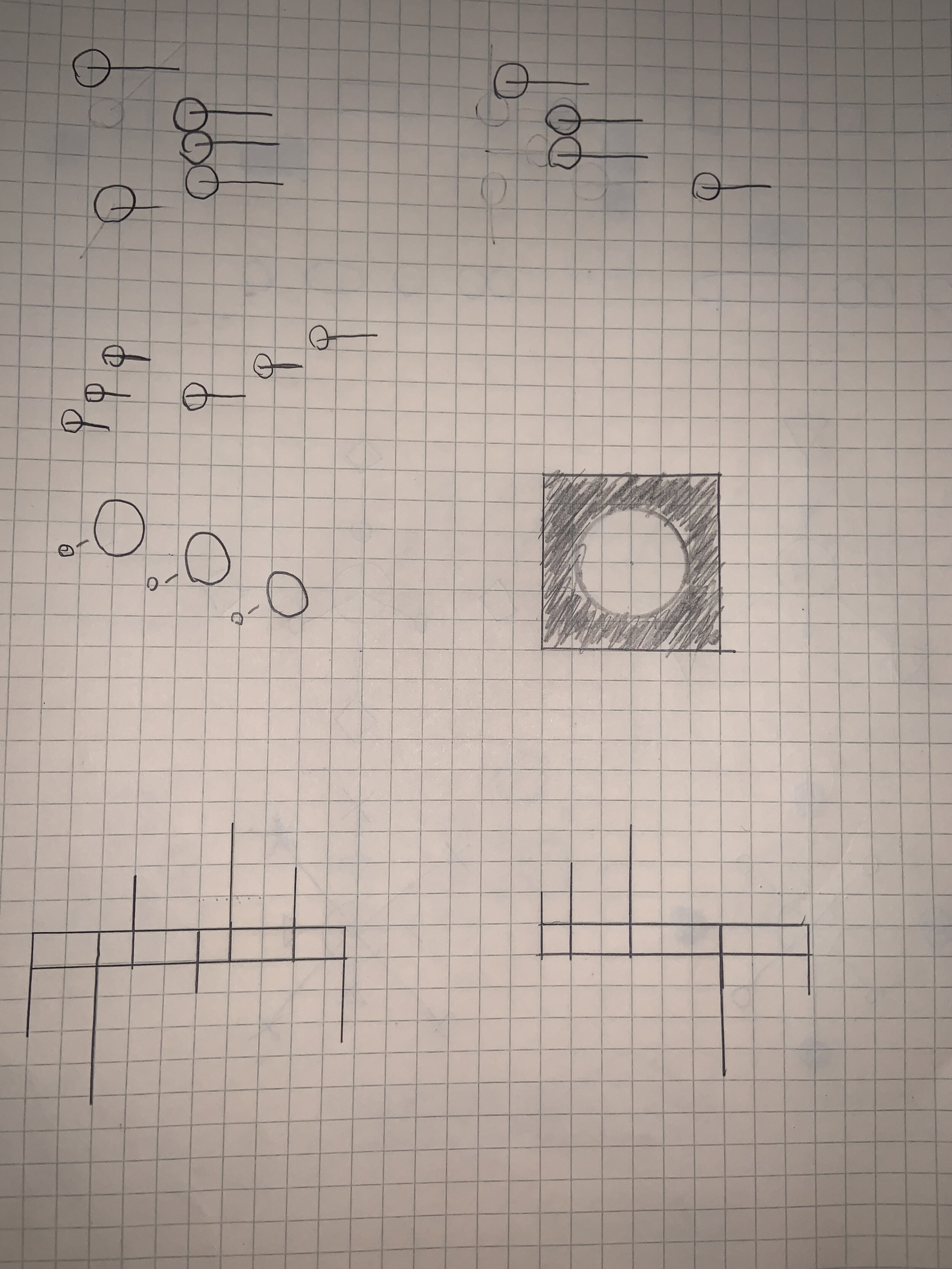

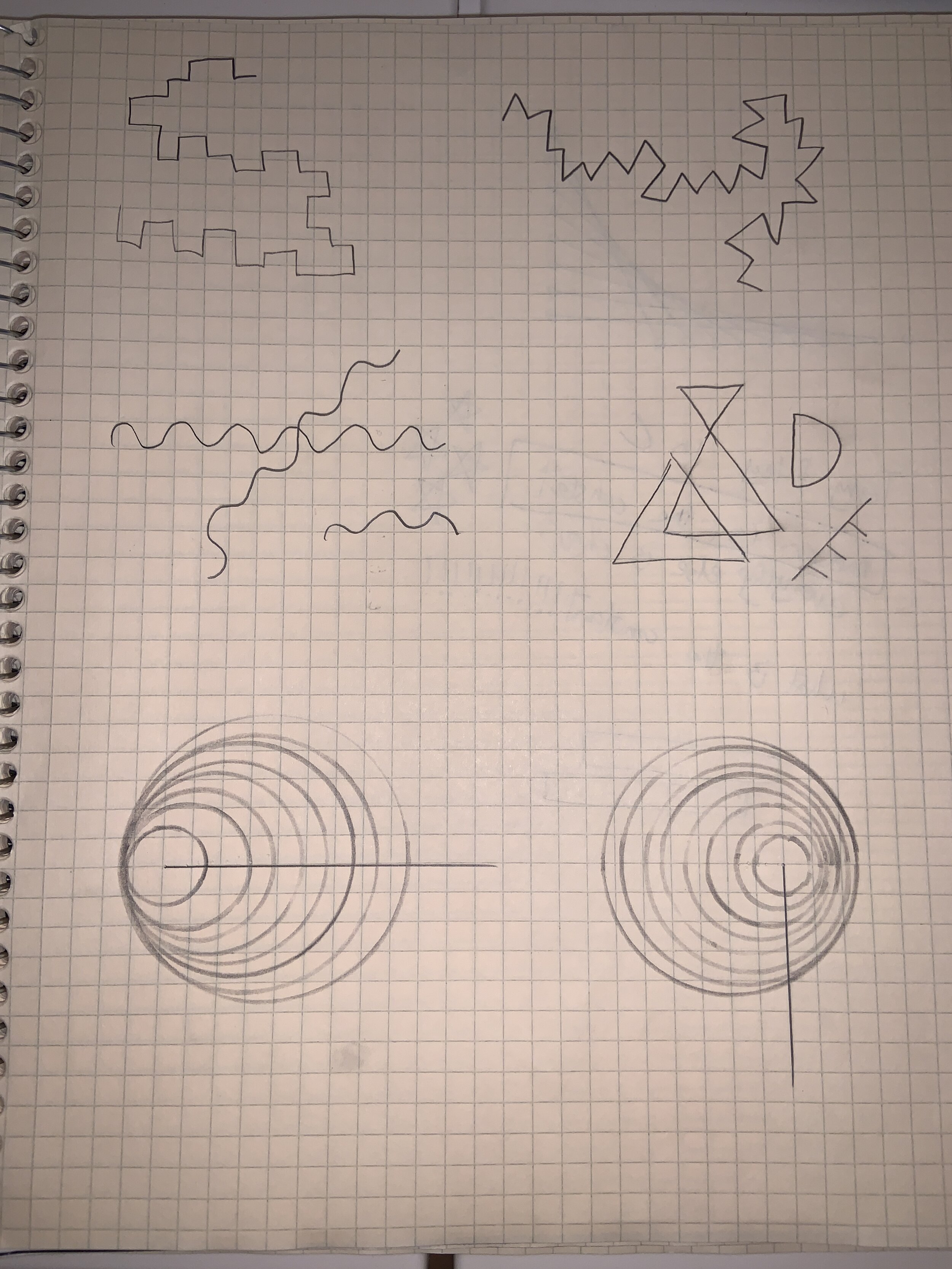

Maths for Artist class homework - sketch exploring Fibonacci sequence

4/1 Wednesday - Day 17

Thesis:

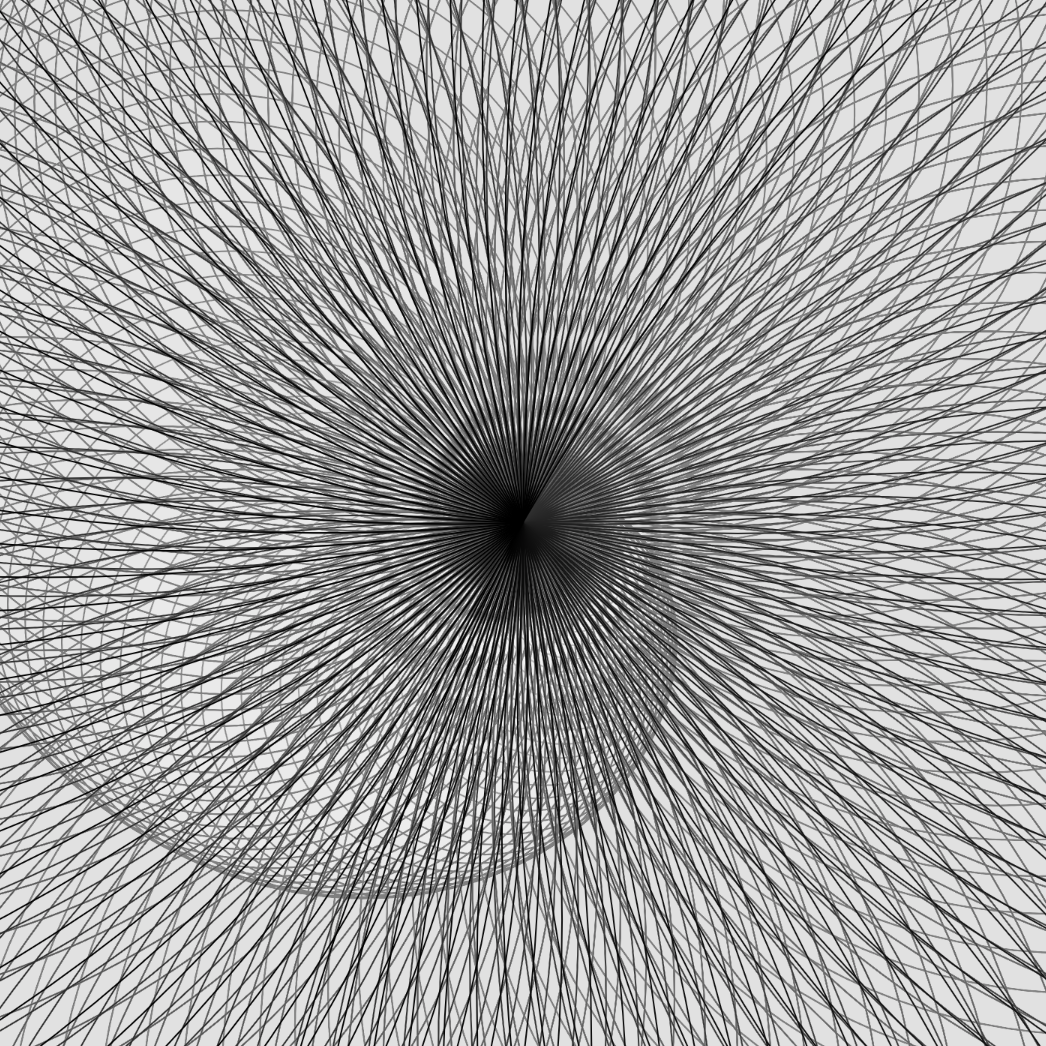

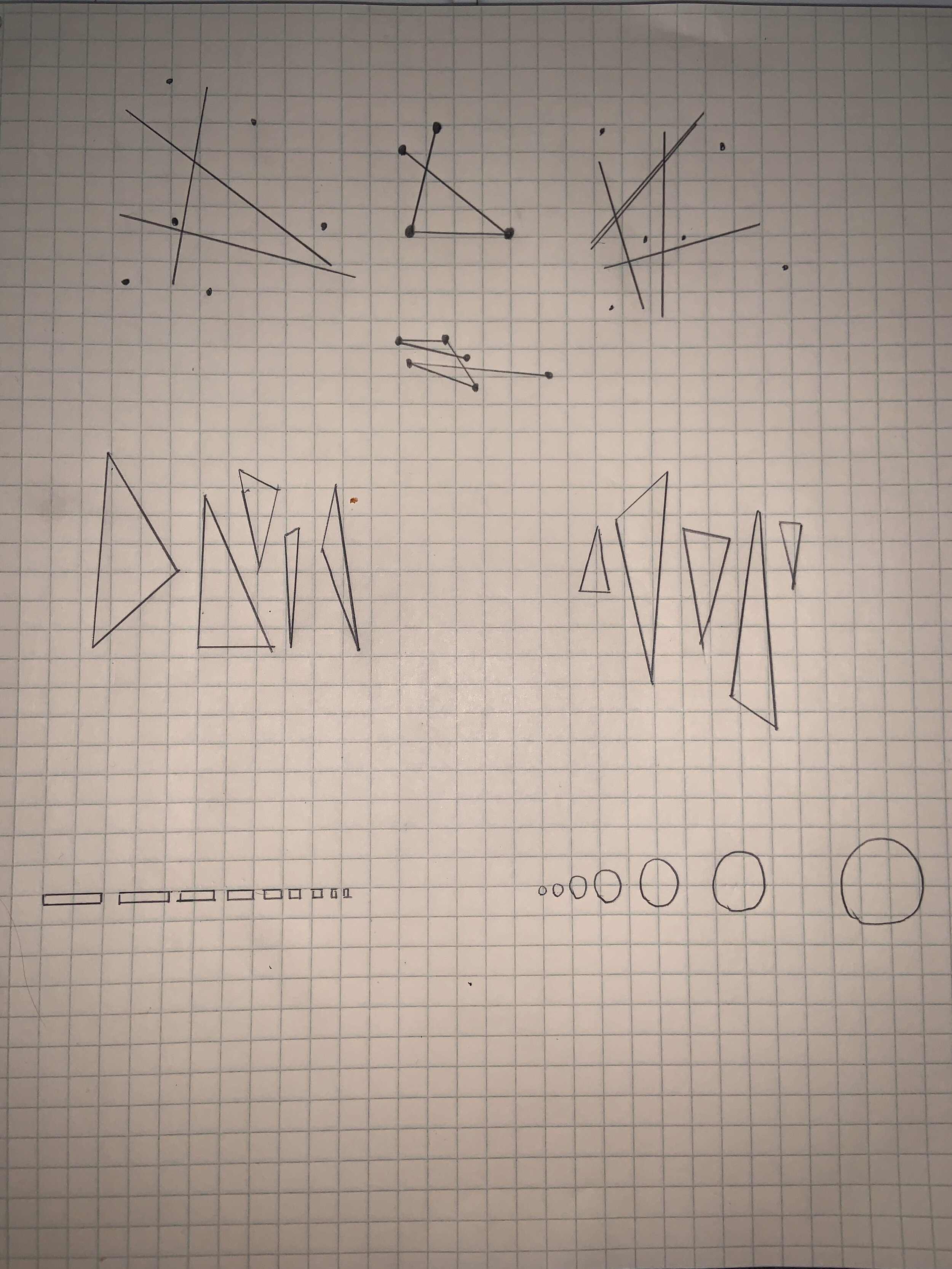

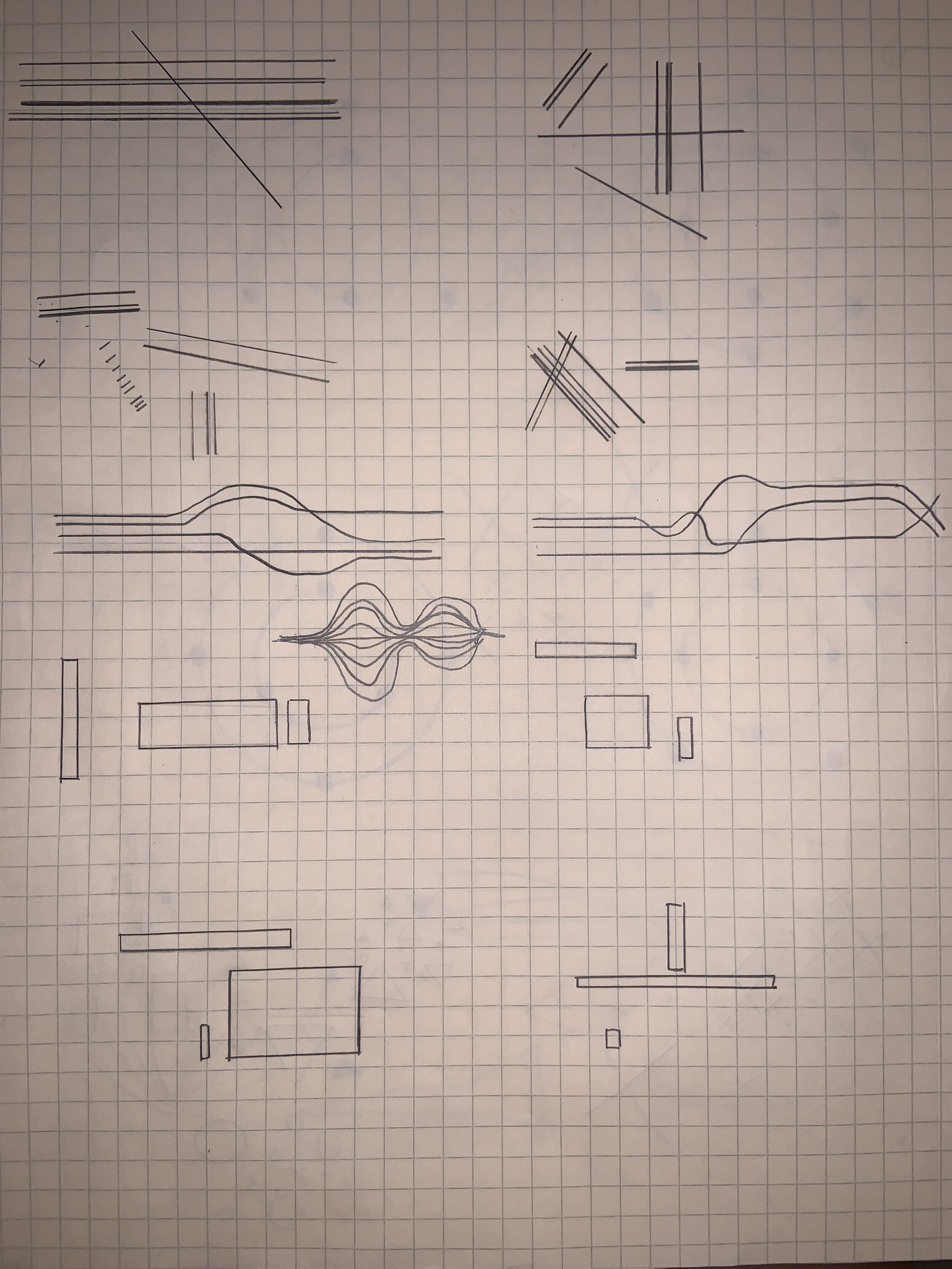

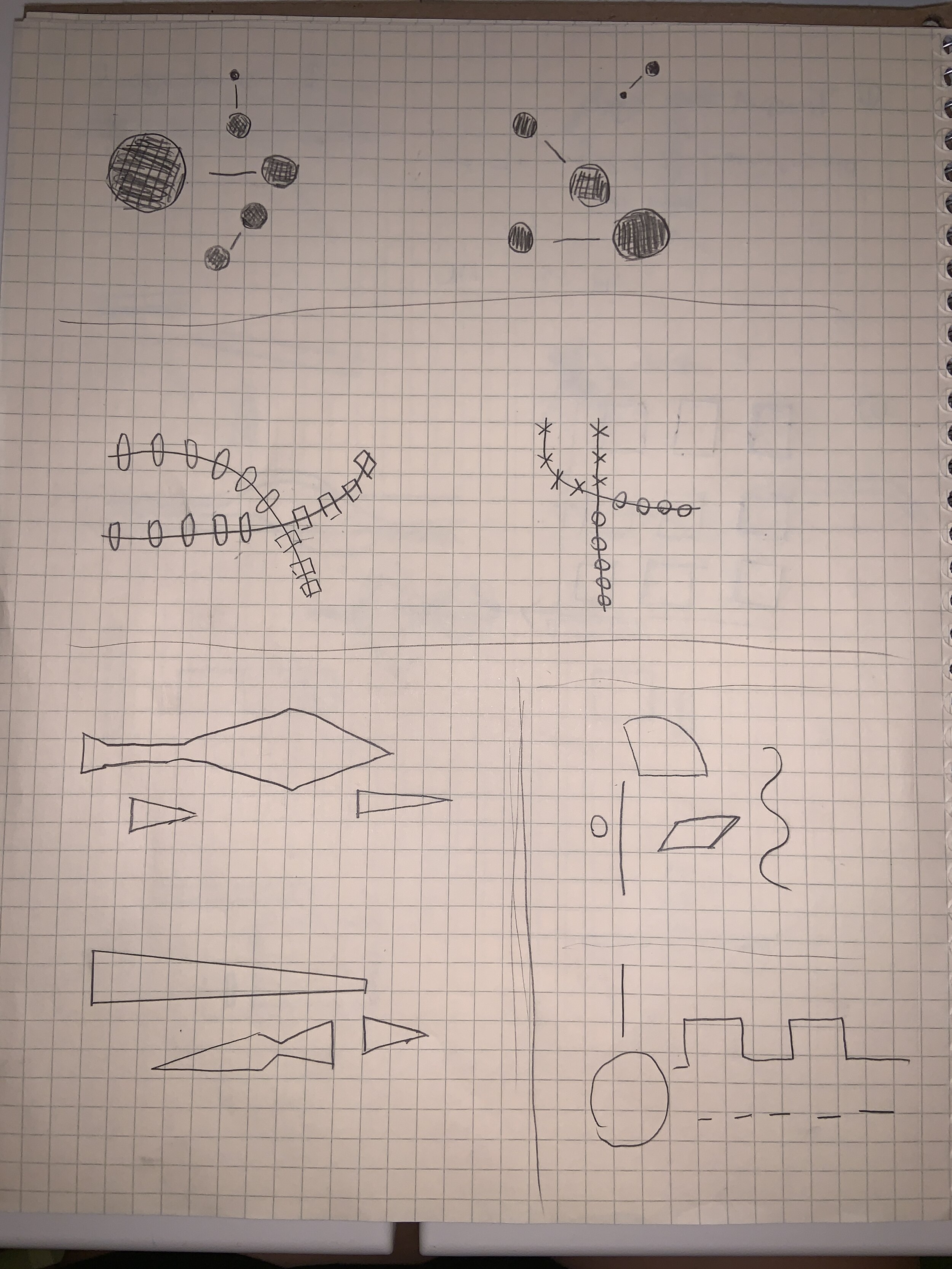

Created series of image patterns for graphic scores for form III

Meeting with Noah and Tak on the updated form III system

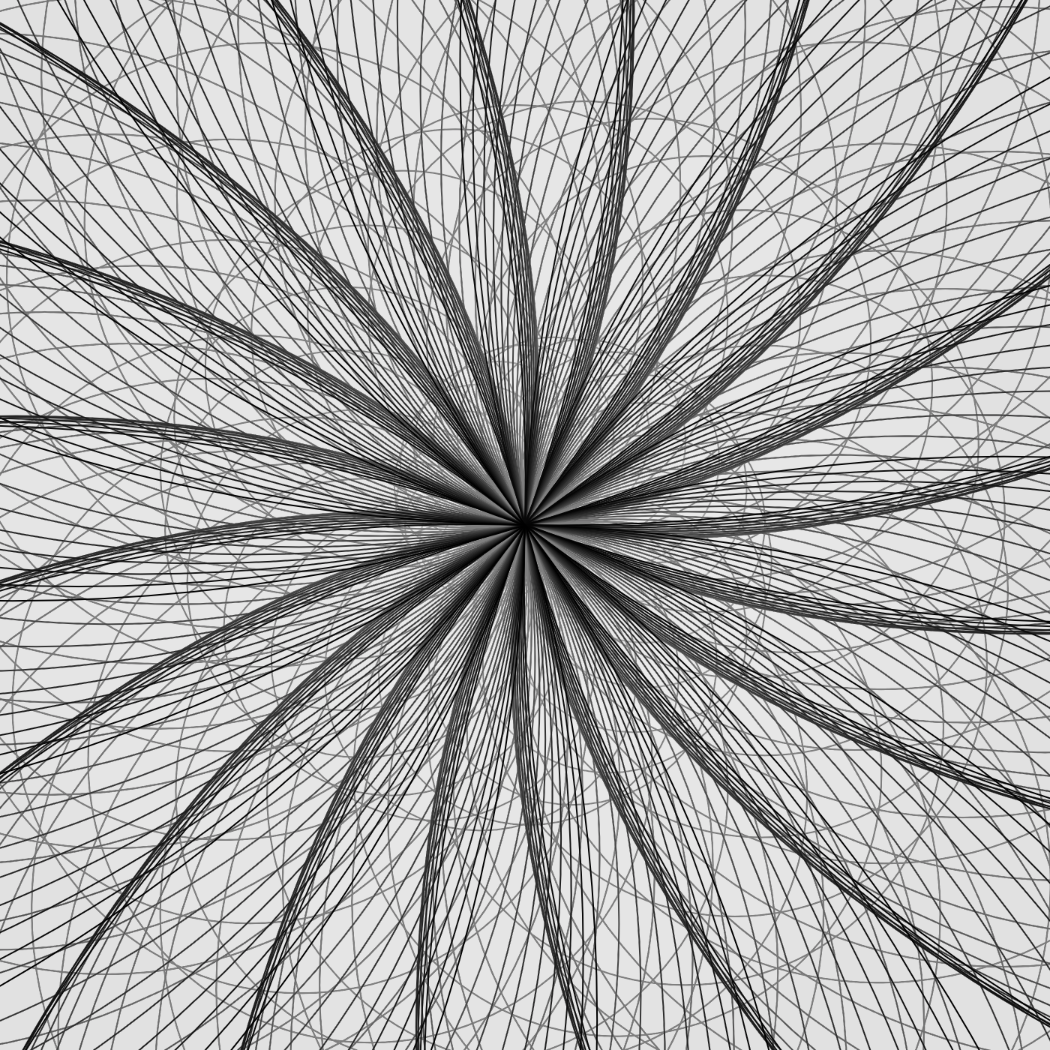

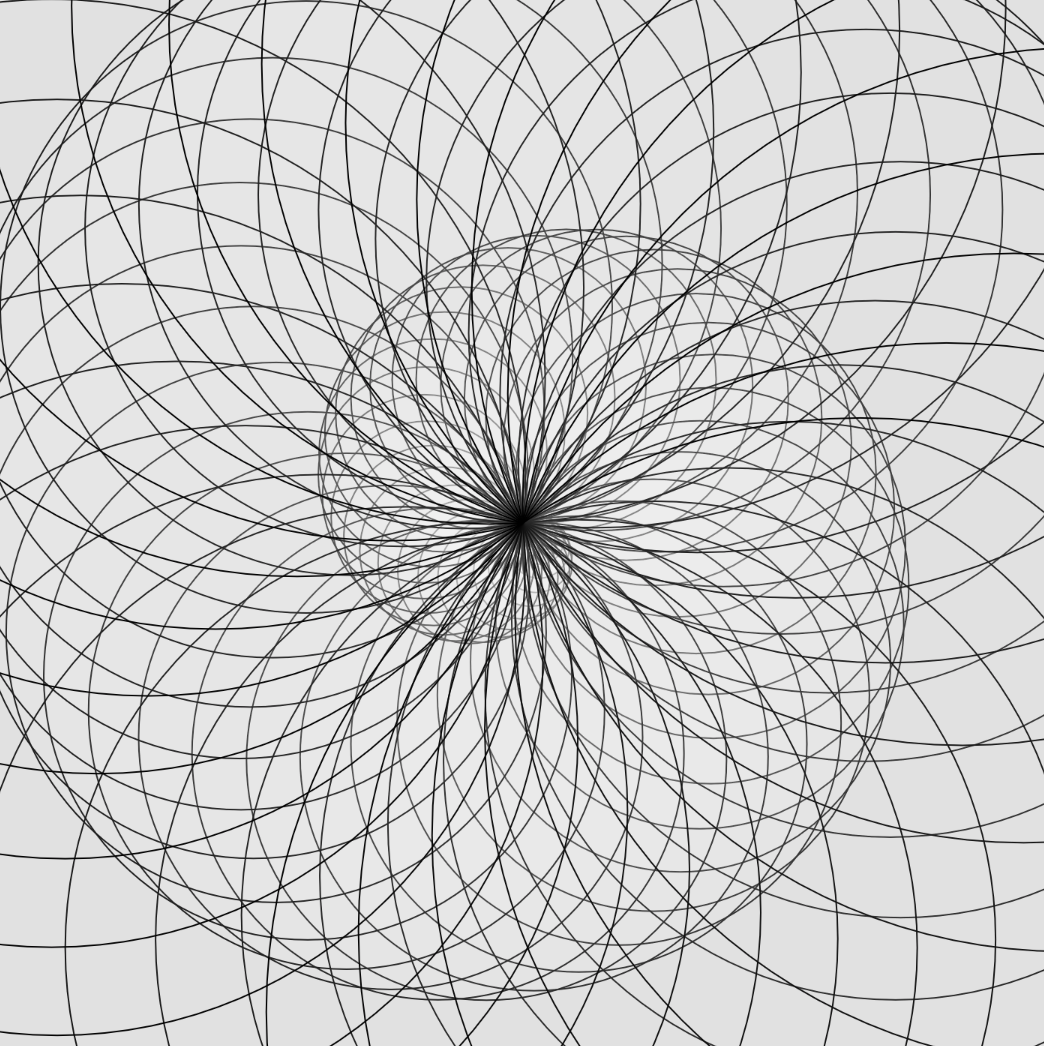

Finalized fractal images to use for form II

3/31 Tuesday - Day 16

Thesis

Office hour with Sarah + thesis group catch up

Thesis meeting with Nancy

Generated procedural fractals in Blender

3/30 Monday - Day 15

Thesis

Research on pivoting form II and form III

Studied Iannis Xenakis - Metastasis

Sound mass technique

Fibonacci sequence in music

Stockhausen - Klavierstücke, Variable form

More drawings for form III

Dinner - Fried chicken and cauliflowers

3/29 Sunday - Day 14

Thesis

ARKit vs Vuforia image tracking reliability test + comparison

3/28 Saturday - Day 13

Thesis

more research on developing a system for Form III

Some projects that mirror the approach of In C, with a small collection of elements that it is up to the players to build into a larger piece:

The repeater orchestra: loops each element a random number of times before moving to the next

https://www.youtube.com/watch?v=JN0bW3ilqF4

https://nmbx.newmusicusa.org/terry-rileys-in-c
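
The "random number of repeats, then move on" rule from the notes above can be sketched in a few lines of Python. This is a toy model for thinking about the Form III system, not how either referenced project is implemented: the function name `perform`, the repeat bounds, and the pattern labels are all hypothetical.

```python
import random


def perform(patterns, min_repeats=1, max_repeats=4, seed=None):
    """Return the sequence one player would play through an ordered pattern list.

    Each pattern is repeated a random number of times (between min_repeats
    and max_repeats, inclusive) before the player moves on to the next one.
    """
    rng = random.Random(seed)  # seeded so a run can be reproduced
    sequence = []
    for pattern in patterns:
        repeats = rng.randint(min_repeats, max_repeats)
        sequence.extend([pattern] * repeats)
    return sequence


if __name__ == "__main__":
    # Two hypothetical players working through the same three patterns.
    for player_seed in (1, 2):
        print(perform(["A", "B", "C"], seed=player_seed))
```

Because each player draws repeat counts independently, players drift apart through the same material, which is where the layered texture comes from.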

3/27 Friday - Day 12

Thesis

Meeting with Reese

Fixed audio clipping issue using granular synthesis

Tested 9 image targets

Life

finished Netflix show Unorthodox

3/26 Thursday - Day 11

Thesis:

Sketched patterns for form III

Office hour with Robby - what is the constant in the composition????

Maths for artist class - Fibonacci sequence & golden ratio, book “Quadrivium”