As I was commuting on a train and thinking about the elements of rhythm, I noticed that each time a chain of cars passes over a gap or a bump between two sections of track, it creates a rhythmic bumping noise like the one in this video:

https://youtu.be/MPPNqhf8fRs?t=29s

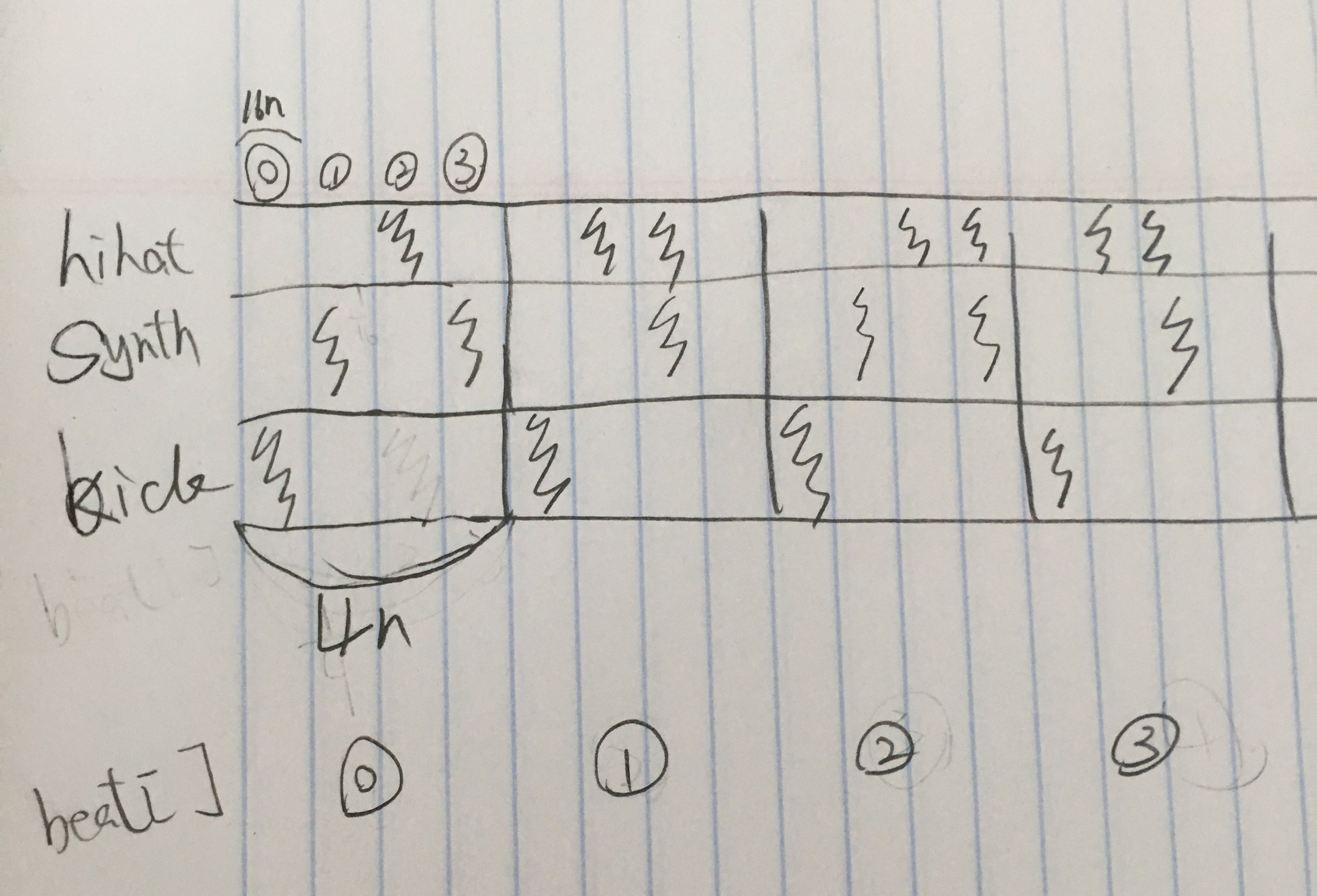

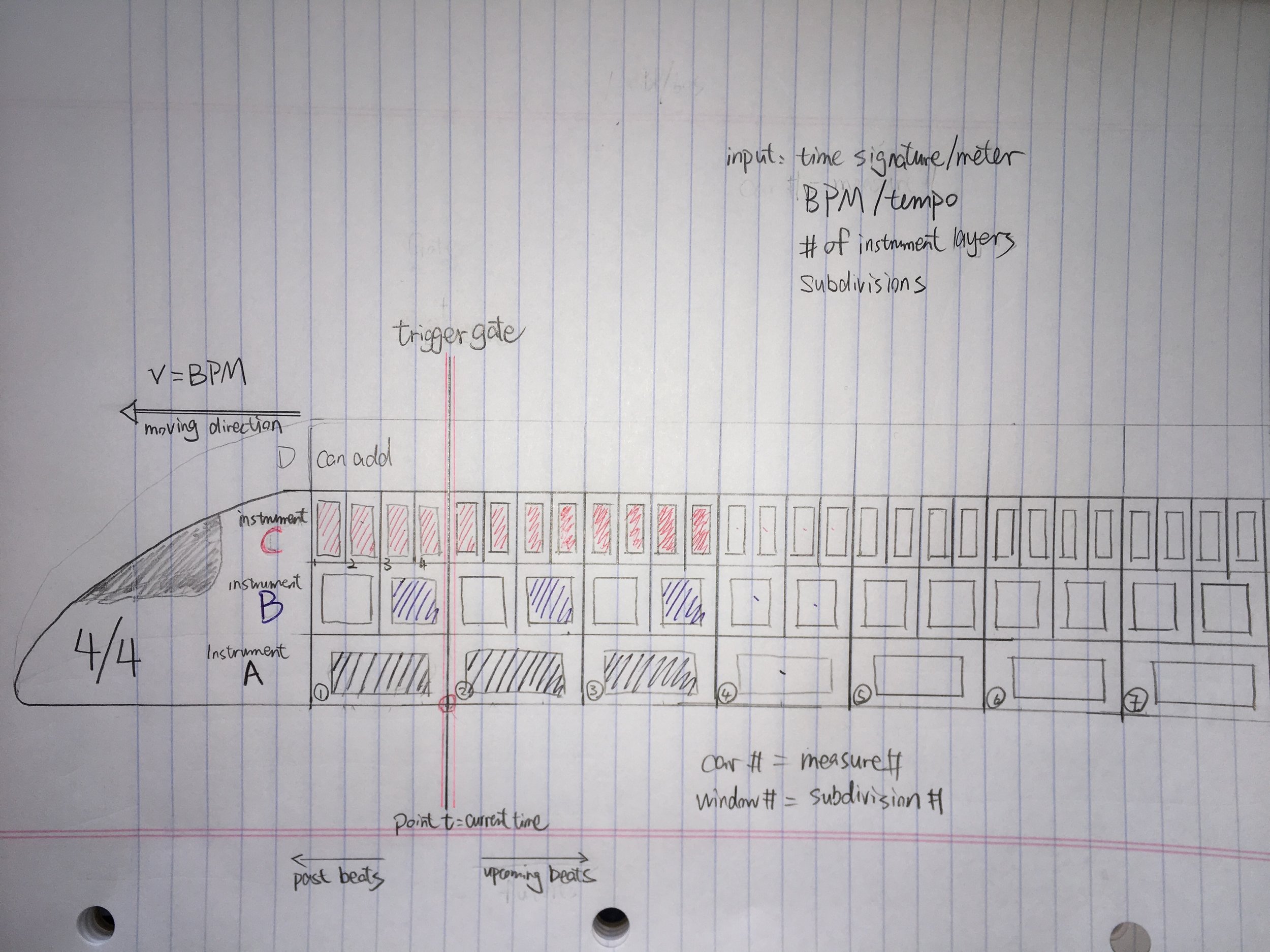

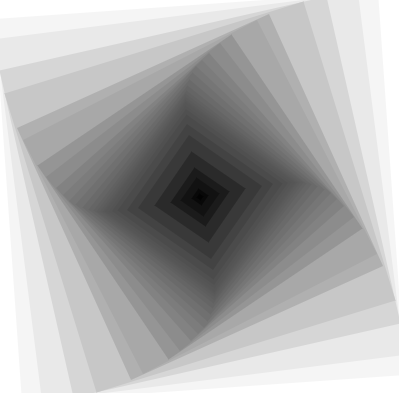

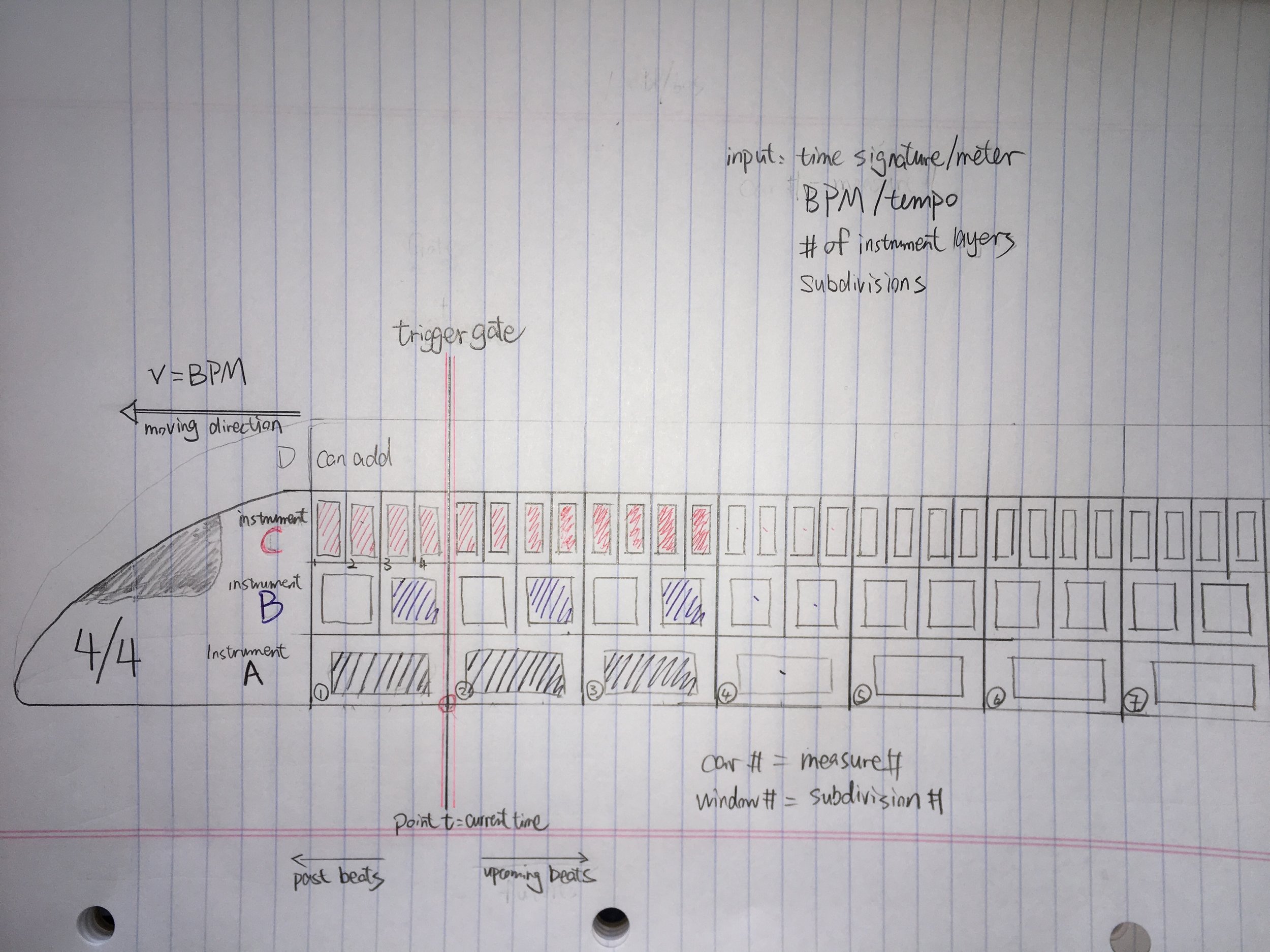

So I figured the noise is the result of a chain of cars passing a fixed point, and the tempo of that rhythmic sound follows the speed of the train: as the train slows down, the pulse slows down too. I played with the concepts of rhythm and the idea of a passing train and its parameters, like speed, number of cars, number of levels, and number of doors and windows, and I imagined a rhythm interface in which a train passes a gate that triggers the different levels of sounds stored in each car. So I created this sketch:

The train interface is constructed with several parameters:

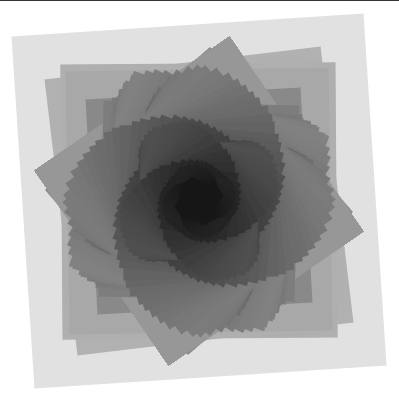

Number of levels: players can import multiple beat samples. Each sample track creates a level on the train, and the tracks stack on top of each other vertically, like the decks of a train. The number of levels equals the number of instrument tracks.

Number of cars: the full length of the train consists of many cars connected horizontally. Each car's number indicates the measure number.

Number of windows: players can input a time signature at the head of the train, which divides each level of a car into smaller sections shown as windows. The windows mark the subdivisions in a measure (car). If a player sets the meter to 4/4, 1 full window on a car is 1 whole note, 2 windows are 2 half notes, 4 windows are 4 quarter notes, and so on. Players can then toggle the light in each window on and off to place a beat of a certain duration at a certain subdivision in a measure, and copy a pattern to paste into later windows. As an example, in this loop, the most basic house/techno rhythm pattern, the black windows in the sketch represent the kick drum, the blue windows the snare drum, and the red windows the hi-hat.
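To make the levels/cars/windows idea a bit more concrete, here is a minimal Python sketch of how the train could be stored in code. Everything in it is an assumption for illustration: the `Train` class, its `toggle` and `copy_car` methods, and the example pattern (kick on every quarter note, snare on beats 2 and 4, hi-hat on the off-beats, with 8 eighth-note windows per car) are hypothetical and not necessarily what the drawing shows. Copying is done per car here, which is a simplification of copying arbitrary window patterns.

```python
from dataclasses import dataclass, field


@dataclass
class Train:
    levels: int            # instrument tracks, stacked vertically
    cars: int              # measures, connected horizontally
    windows_per_car: int   # subdivisions per measure
    # (level, car, window) triples whose light is switched on
    lit: set = field(default_factory=set)

    def toggle(self, level: int, car: int, window: int) -> bool:
        """Flip one window's light; return True if it is now on."""
        if not (0 <= level < self.levels
                and 0 <= car < self.cars
                and 0 <= window < self.windows_per_car):
            raise IndexError("no such window on this train")
        key = (level, car, window)
        if key in self.lit:
            self.lit.remove(key)
            return False
        self.lit.add(key)
        return True

    def copy_car(self, src: int, dst: int) -> None:
        """Overwrite the pattern in car `dst` with the pattern in car `src`."""
        if not (0 <= src < self.cars and 0 <= dst < self.cars):
            raise IndexError("no such car on this train")
        kept = {k for k in self.lit if k[1] != dst}
        pasted = {(lvl, dst, win) for (lvl, car, win) in self.lit if car == src}
        self.lit = kept | pasted


# Hypothetical level assignment and pattern (assumed, not read off the sketch).
KICK, SNARE, HIHAT = 0, 1, 2
train = Train(levels=3, cars=2, windows_per_car=8)
for w in (0, 2, 4, 6):      # kick on every quarter note
    train.toggle(KICK, 0, w)
for w in (2, 6):            # snare on beats 2 and 4
    train.toggle(SNARE, 0, w)
for w in (1, 3, 5, 7):      # hi-hat on the off-beats
    train.toggle(HIHAT, 0, w)
train.copy_car(0, 1)        # paste measure 1's pattern into measure 2
```

Storing only the lit windows in a set keeps toggling and copying simple; a nested list of booleans would work just as well for a train this small.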

Speed of the train: players can also input the speed of the train as BPM; the higher the BPM, the faster the train moves. For example, if the meter is 4/4 and the BPM is 120, there are 120/60 = 2 beats per second. So every second, two quarter-note windows pass a given point.
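The same arithmetic can be written as a small Python sketch. It assumes the beat is a quarter note (as in 4/4) and, to turn tempo into on-screen speed, an invented window width in pixels; the function names and the `window_width_px` parameter are hypothetical.

```python
def beats_per_second(bpm: float) -> float:
    """Tempo in beats per second: 120 BPM is 120 / 60 = 2 beats per second."""
    return bpm / 60


def windows_per_second(bpm: float, windows_per_beat: int = 1) -> float:
    """How many windows pass a fixed point each second.

    windows_per_beat is 1 for quarter-note windows and 2 for eighth-note
    windows, assuming the beat is a quarter note.
    """
    return beats_per_second(bpm) * windows_per_beat


def train_speed_px_per_second(bpm: float, window_width_px: float,
                              windows_per_beat: int = 1) -> float:
    """Horizontal speed of the train on screen, for an assumed window width."""
    return windows_per_second(bpm, windows_per_beat) * window_width_px


print(beats_per_second(120))               # 2.0
print(train_speed_px_per_second(120, 80))  # 160.0
```

With quarter-note windows drawn 80 pixels wide (an arbitrary choice), 120 BPM moves the train 160 pixels per second, and the speed scales linearly with both the BPM and the window width.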

Gate: somewhere in the middle of the screen there is a gate, a fixed point that each car of the train passes, acting as a trigger point for each note (window) carried in the car. The train travels from right to left and the gate represents the current time: everything to the left of the gate is past beats, whereas everything to the right is upcoming beats, to be played when they reach the gate.
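One way the gate could work in code is to convert elapsed time into the index of the window currently at the gate, then fire every window that crossed the gate since the last screen update. This is only a sketch under assumptions: windows are numbered from 0 across the whole train, window 0 sits at the gate at time 0, and the function names and the `windows_per_beat` parameter are made up for illustration.

```python
import math


def index_at_gate(elapsed_s: float, bpm: float, windows_per_beat: int = 1) -> int:
    """Index of the window currently at the gate, counted from the train head.

    Assumes window 0 is at the gate when elapsed_s is 0.
    """
    return math.floor(elapsed_s * bpm / 60 * windows_per_beat)


def newly_triggered(prev_s: float, now_s: float, bpm: float,
                    windows_per_car: int, windows_per_beat: int = 1):
    """(car, window) pairs that reached the gate after prev_s, up to now_s."""
    first = index_at_gate(prev_s, bpm, windows_per_beat) + 1
    last = index_at_gate(now_s, bpm, windows_per_beat)
    # divmod splits the train-wide index into (measure, subdivision).
    return [divmod(i, windows_per_car) for i in range(first, last + 1)]


# 120 BPM, 4 quarter-note windows per car: between 0.4 s and 1.1 s
# the second and third windows of the first car reach the gate.
print(newly_triggered(0.4, 1.1, bpm=120, windows_per_car=4))  # [(0, 1), (0, 2)]
```

Checking the whole interval between two updates, rather than only the current instant, means no beat is skipped if a frame is drawn late. Window 0 itself has to be fired once when playback starts, since it is already at the gate.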

----------------

Limitations I can think of right now: since I have not yet seen this moving train in action, I cannot picture how fast it will move if I set the BPM to a common value like 160. If it moves too fast, it will be really hard for our eyes to catch each beat and understand what is going on in each measure.

Applications: I can see this interface being a game/instrument for kids who are learning music and want to create their own beats. It would help them visualize how rhythm works while they have fun running their own train.